Today I attended the IRILL days here in Paris. IRILL’s goal is to bring together high-level researchers and scientists, FOSS (Free and Open Source Software) developers, and FOSS industry players to tackle the three fundamental challenges that FOSS poses:

- scientific: study problems raised by the development and maintenance of FOSS code

- educational: adapt the teaching curriculum to FOSS

- economic: help create a sustainable ecosystem for FOSS innovations

The most interesting part of the conference for me was Debian package dependency management, which is currently handled by a number of ad-hoc algorithms, a problem that the Mancoosi Project is tackling. Essentially, there are many software packages that need different libraries and services, and when installing or upgrading a package we must decide which other packages to install in order to satisfy those dependencies. The dependency graph, together with the package to be installed or upgraded, is captured in a language called CUDF (complete PDF description here). When deciding which packages to retain, upgrade or install, there are goals we want to achieve, such as minimising the number of newly installed packages: the different optimisation criteria are described here. There is a competition associated with this computationally difficult problem, the MISC Competition, which was held for the first time this year, during the FLoC conference.

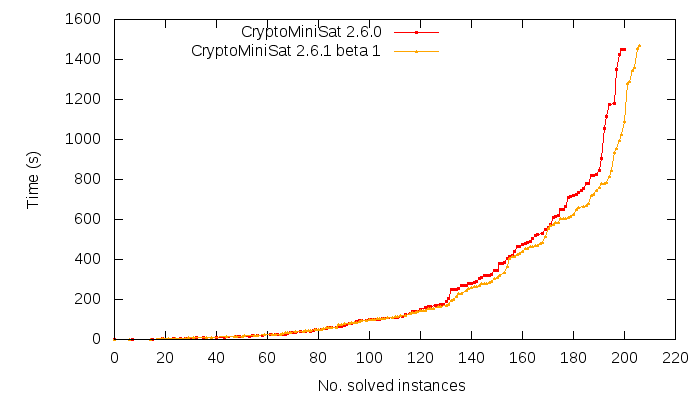
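To make the dependency problem a bit more concrete, here is a minimal Python sketch. It is not CUDF and not how any real Debian tool works: the package names, the `REPO` table and the `solve` function are all made up for illustration, and the search is plain brute force. It only shows the shape of the problem: find the smallest set of new packages such that every dependency is met and no two installed packages conflict.

```python
from itertools import combinations

# Hypothetical toy repository: package -> (dependencies, conflicts).
# Each dependency is a list of alternatives; any one of them satisfies it.
REPO = {
    "editor": ([["libgui"], ["spell-a", "spell-b"]], []),
    "libgui": ([["libc"]], []),
    "spell-a": ([["libc"], ["dict"]], ["spell-b"]),
    "spell-b": ([["libc"]], ["spell-a"]),
    "dict": ([], []),
    "libc": ([], []),
}


def is_consistent(installed):
    """True if every installed package has its dependencies met and no conflicts."""
    for pkg in installed:
        deps, conflicts = REPO[pkg]
        # Every dependency group needs at least one installed alternative.
        if any(not any(alt in installed for alt in group) for group in deps):
            return False
        if any(c in installed for c in conflicts):
            return False
    return True


def solve(request, already_installed):
    """Return a smallest set of new packages that makes `request` installable.

    Simplification (an assumption of this sketch): already installed
    packages are always kept, never removed or upgraded.
    Returns None if no consistent installation exists.
    """
    base = set(already_installed) | {request}
    candidates = sorted(set(REPO) - base)
    # Try 0 extra packages, then 1, then 2, ... so the first hit is minimal.
    for k in range(len(candidates) + 1):
        for extra in combinations(candidates, k):
            if is_consistent(base | set(extra)):
                return set(extra) | {request}
    return None


print(solve("editor", {"libc"}))
```

The loop over subsets is exponential in the number of candidate packages, which is exactly why this toy approach falls over on a real distribution with tens of thousands of packages, and why dedicated solvers are interesting here.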

Interestingly, the problem of package dependencies maps very well to a problem faced by industry: configuration management. For instance, many high-end car models have thousands of options. Some of these options are incompatible, and some of them depend on other options being taken. For example, it may be necessary to take a certain color scheme in order to apply certain figurative patterns on the car. Although this sounds trivial, once the number of options goes up, the number of possible combinations typically grows exponentially, after which finding suitable solutions for customers might become a real challenge. For example, I wouldn’t be surprised if a modern airplane such as the A380 had customer options approaching the tens of thousands. Managing this automatically is a really interesting and very real challenge, and I hope CryptoMiniSat will be able to take part in solving it.
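As a rough illustration of how such option rules turn into a satisfiability problem, the sketch below writes a few invented car options as clauses (each clause is an "at least one of these must hold" rule) and enumerates all configurations by brute force. The option names and rules are hypothetical, and a real system would hand the clauses to a SAT solver instead of enumerating.

```python
from itertools import product

# Hypothetical options for an imaginary car model.
OPTIONS = ["sport_pack", "two_tone_paint", "racing_stripes", "sunroof", "roof_rack"]

# Each clause is a list of (option, wanted_value) literals.
# A clause is satisfied if at least one of its literals holds.
CLAUSES = [
    # racing_stripes implies two_tone_paint
    [("racing_stripes", False), ("two_tone_paint", True)],
    # sunroof and roof_rack are incompatible
    [("sunroof", False), ("roof_rack", False)],
    # sport_pack and roof_rack are incompatible
    [("sport_pack", False), ("roof_rack", False)],
]


def satisfies(config, clauses):
    """True if the configuration satisfies every clause."""
    return all(any(config[opt] == want for opt, want in clause) for clause in clauses)


def valid_configurations(options, clauses):
    """Yield every assignment of options that respects all clauses."""
    # 2 ** len(options) candidates: this is the exponential blow-up.
    for values in product([False, True], repeat=len(options)):
        config = dict(zip(options, values))
        if satisfies(config, clauses):
            yield config


valid = list(valid_configurations(OPTIONS, CLAUSES))
print(len(valid), "valid configurations out of", 2 ** len(OPTIONS))
```

With five options there are only 32 candidates to check, but every additional option doubles that number, so enumeration stops being practical long before one reaches thousands of options.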

Documenting code is not always so much fun. However, in order for the code to be extended by others, documentation needs to exist. Since I conceived
