Many modern SAT solvers do a lot of what’s called inprocessing. These steps simplify the CNF into something that is easier to solve. In the compiler world, these are called rewritngs since the effectively rewrite (parts of) the formula to something else that retain certain properties, such as satisfiability. One of the most successful such rewrite rules for CNF is Bounded Variable Elimination (BVE, classic paper here), but there are many others. These rewrites are usually done by modern SAT solvers in a particular order that was found to be working well for their particular use-case, but they are not normally accessible from the outside.

Sometimes one wants to use these rewrite rules for something other than just solving the instance via the SAT solver. One such use-case is to use these rewrite rules to simplify the CNF in order to count the solution to it. In this scenario, the user wants to rewrite the CNF in a very particular way, and then extract the simplified CNF. Other use-cases are easy to imagine, such as e.g. MaxSAT, core counting, etc. Over the years, CryptoMiniSat has evolved such a rewrite capability. It is possible to tell CryptoMiniSat to simplify the formula exactly how the user wants the solver to be satisfied and then extract the simplified formula.

Example Use-Case

Let’s say we have a CNF that we want to simplify:

p cnf 4 2

1 2 3 4 0

1 2 3 0In this CNF, 1 2 3 4 0 is not needed, because it is subsumed by the clause 1 2 3 0. You can run subsumption using CryptoMiniSat this way:

#include "cryptominsat5/cryptominisat.h"

#include <vector>

#include <cmath>

#include <iostream>

using namespace CMSat;

using namespace std;

#define lit(a) Lit(std::abs(a)-1, a<0)

int main() {

Solver s;

vector<Lit> cl;

s.add_new_vars(4);

cl = vector<Lit>{lit(1), lit(2), lit(3), lit(4)};

s.add_clause(cl);

cl = vector<Lit>{lit(1), lit(2), lit(3)};

s.add_clause(cl);

s.simplify(NULL, "occ-backw-sub");

s.start_getting_clauses();

while(s.get_next_clause(cl) {

for(const auto l: cl) cout << l << " ";

cout << endl;

}

s.end_getting_clauses()

return 0;

}This code runs the inprocessing system occ-backw-sub, which stands for backwards subsumption using occurrence lists. The input CNF can be anything, and the output CNF is the simplified CNF. This sounds like quite a lot of code for simple subsumption, but this does a lot of things under the hood for things to be fast, and it is a lot more capable than just doing subsumption.

Notice that the first argument we passed to simplify() is NULL. This means we don’t care about any variables being preserved — any variable can (and will) be eliminated if occ-bve is called. In case some variables are important to you not to be eliminated, you can create a vector of them and pass the pointer here. If you have called the renumber API, then you can get the set of variables you had via clean_sampl_and_get_empties(). The numbering will not be preserved, but their set will be the same, though not necessarily the same size. This is because some variables may have been set, or some variables may be equivalent to other variables in the same set. You can get the variables that have been set via get_zero_assigned_lits().

Supported Inprocessing Steps

Currently, the following set of inprocessing steps are supported:

| API name | Inprocessing performed |

| occ-backw-sub | Backwards subsumption using occurence lists |

| occ-backw-sub-str | Backwards subsumption and strengthening using occurence lists |

| occ-bce | Blocked clause elimination (paper) |

| occ-ternary-res | Ternary resolution (paper) |

| occ-lit-rem | Literal removal via strengthening |

| occ-cl-rem-with-orgates | OR-gate based clause removal (unpublished work, re-discovered by others) |

| occ-rem-with-orgates | OR-gate based literal removal |

| occ-bve | Bounded variable elimination (paper) |

| occ-bve-empty | Bounded variable elimination of empty resolvents only |

| intree-probe | Probe using in-tree probing, also do hyper-binary resolution and transitive reduction (paper). Also does hyper-binary resoution & transitive reduction |

| full-probe | Probe each literal individually (slow compared to intree-probe) |

| backbone | Backbone simplification (cadiback paper) |

| sub-impl | Subsume with binary clauses with binary clauses (fast) |

| sub-str-cls-with-bin | Subsume and strengthen long clauses with binary clauses (fast) |

| sub-cls-with-bin | Subsume long clauses with binary clauses (fast) |

| distill-bins | Distill binary clauses |

| distill-cls | Distill long clauses (distillation paper) |

| distill-cls-onlyrem | Distill long clauses, but only remove clauses, don’t shorten them. Useful if you want to make sure BVE can run at full blast after this step. |

| clean-cls | Clean clauses of set literals, and delete satisfied clauses |

| must-renumber | Renumber variables to start from 0, in case some have been set to TRUE/FALSE or removed due to equivalent literal replacement. |

| must-scc-vrepl | Perform strongly connected component analysis and perform equivalent literal replacement. |

| oracle-vivify | Vivify clauses using the Oracle tool by Korhonen and Jarvisalo (paper). Slow but very effective. |

| oracle-vivif-sparsify | Vivify & sparsify clauses using the Oracle tool by Korhonen and Jarvisalo. Slow but very effective. |

Convenience Features Under the Hood

The steps above do more than what they say on the label. For example, the ones that start with occ build an occurrence list and use it for the next simplification stop if it also starts with occ. They also all make sure that memory limits and time limits are adhered to. The timeout multiplier can be changed via set_timeout_all_calls(double multiplier). The time limits are entirely reproducible, there is no actual seconds, it’s all about an abstract “tick” that is ticking. This means that all bugs in your code are always reproducible. This helps immensely with debugging — no more frustrating Heisenbugs. You can check the cryptominisat.h file for all the different individual timeouts and memouts you can set.

Under the hood you also get a lot of tricks implemented by default. You don’t have to worry about e.g. strengthening running out of control, it will terminate in reasonable amount of ticks, even if that means it will not run to completion. And the next time you run it, it will start at a different point. This makes a big difference in case you actually want your tool to be usable, rather than just “publish and forget”. In many cases, simplification only makes things somewhat faster, and you want to stop performing the simplification after some time, but you also want your users to be able to report bugs and anomalies. If the system didn’t have timeouts, you’d run the risk of the simplifier running way too long, even though the actual solving would have taken very little time. And if the timeout was measured in seconds, you’d run the risk of a bug being reported but being irreproducible, because the exact moment the timeout hit for the bug to occur would be irreproducible.

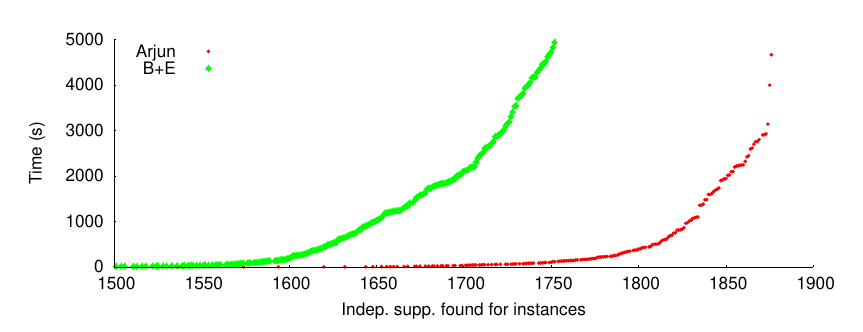

Making the Best of it All

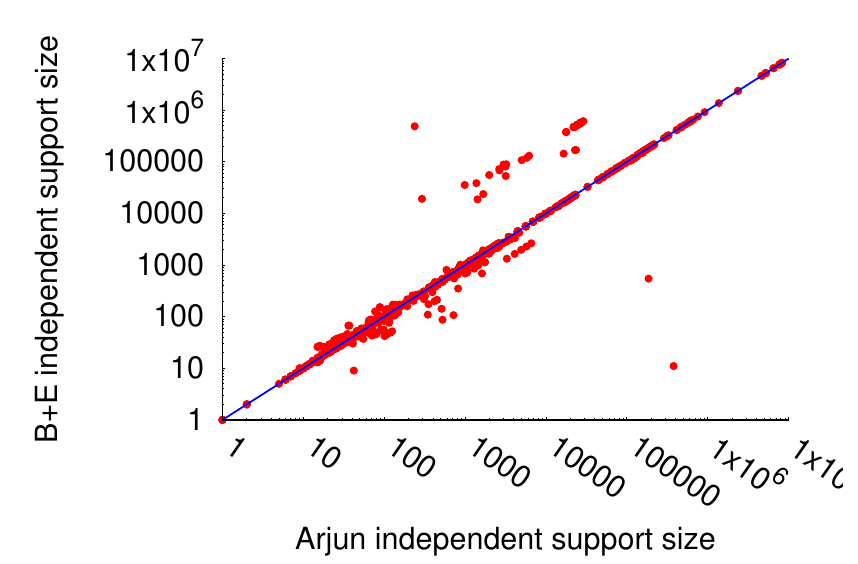

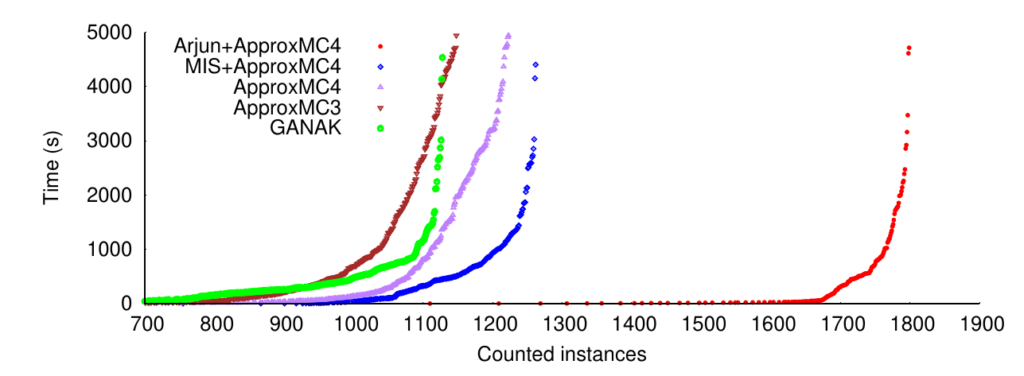

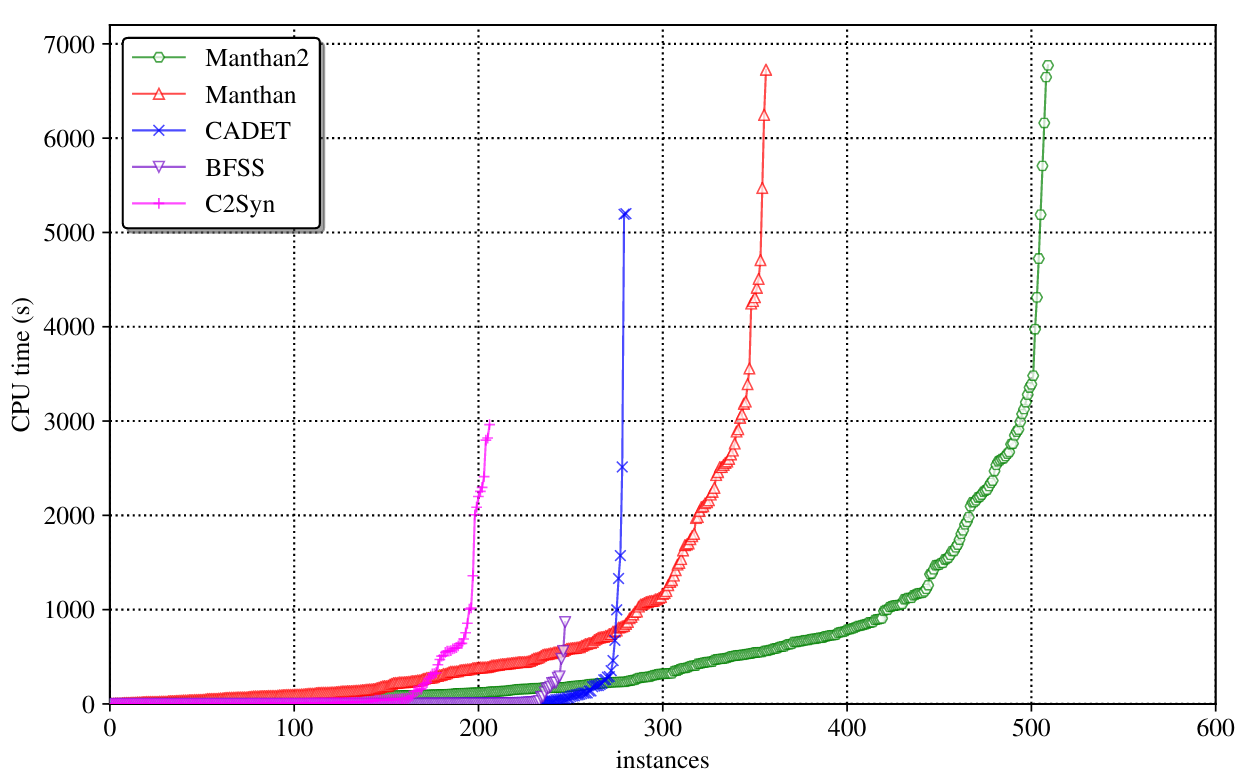

This system is just an API — it doesn’t do much on its own. You need to play with it, and creatively compose simplifications. If you take a look at cryptominisat.h, it already has a cool trick, where it moves the simplified CNF from an existing solver to a new, clean solver through the API, called copy_simp_solver_to_solver(). It is also used extensively in Arjun, our CNF simplifier for counting. There, you can find the function that controls CryptoMiniSat from the outside to simplify the CNF in the exact way needed. It may be worthwhile reading through that function if you want to control CryptoMiniSat via this API.

The simplify() API can give you the redundant clauses, too (useful if you e.g. did ternary or hyper-binary resolution), and can give you the non-renumbered CNF as well — check out the full API in cryptominisat.h, or the Arjun code. Basically, there is a red and a simplified parameter you can pass to this function.

Perhaps I’ll expose some of this API via the Python interface, if there is some interest for it. I think it’s quite powerful and could help people who use CNFs in other scenarios, such as MaxSAT solving, core counting, core minimization, etc.

Closing Thoughts

I think there is currently a lack of tooling to perform the already well-known and well-documented pre- and inprocessing steps that many SAT solvers implement internally, but don’t expose externally. This API is supposed to fill that gap. Although it’s a bit rough on the edges sometimes, hopefully it’s something that will inspire others to either use this API to build cool stuff, or to improve the API so others can build even cooler stuff. While it may sound trivial to re-implement e.g. BVE, once you start going into the weeds of it (e.g. dealing with the special case of detecting ITE, OR & AND gates’ and their lower resolvent counts, or doing it incrementally with some leeway to allow clause number increase), it gets pretty complicated. This API is meant to alleviate this stress, so researchers and enthusiasts can build their own simplifier given a set of working and tested “LEGO bricks”.