Last time I gave a talk, I got some quite deserved fire about the way I approach SAT solving: in a more practical than scientific way. So, to give some food for thought for those who wish to approach SAT from a more scientific viewpoint, and to demonstrate to what lengths I have gone to give (at least to myself) a scientific viewpoint, let me talk a little bit about a fun fact that I have found. The fun fact, as is quite usual for SAT, seems entirely trivial, even banal, but has somehow (so far) eluded proper explanation for me. Maybe you will be the one to give the right explanation :)

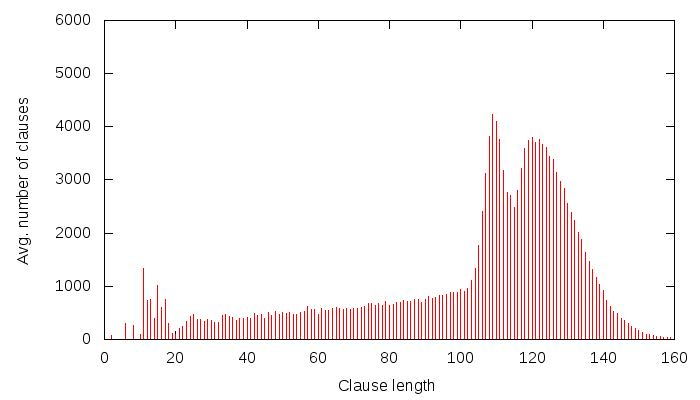

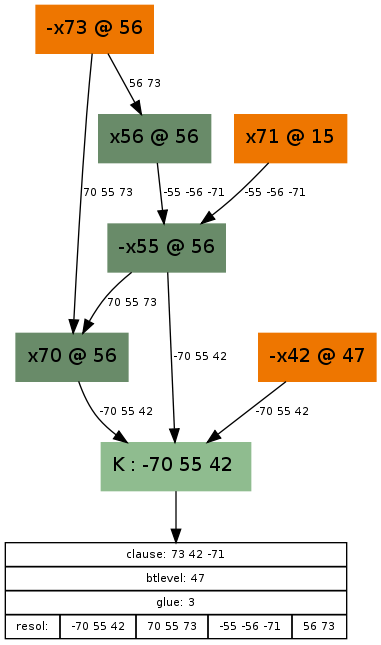

So, I generate a Grain cipher instance using, e.g. the Grain-of-Salt tool that I developed. If you don’t want to generate these yourself, there are a couple (multi-hundred thousand) pre-generated such problems you can download from here. I launch MiniSat 2.1 “core”(i.e. the most simple version) on these problems with a little twist: I write to a file the length of each generated learnt clause. Now, there are two kinds of CNF files I use: one that has 60 randomly picked randomly set state variables, and another one that is plain, and doesn’t have any variables set. Obviously, the CNF that has variables set should be easier to solve, and if Grain is a proper cipher, it should be about 2^60 easer to solve. Now, I print the average learnt clause length that I observe for the CNF that has 0 variables set, and I get this graph:

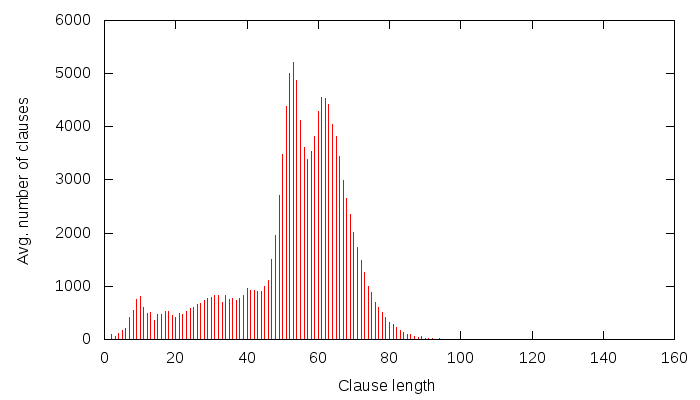

I am sure one could sing long and elaborate songs about this graph, but instead, I will just say: it has a strange curvy thing at around clause length 120. Now, I will generate a similar graph, but this time, I will use a CNF that has 60 state (i.e. important) variables set. Note that this should indeed be 2^60 times easier to solve (since Grain is a nice cipher, and my CNF generation abilities aren’t that poor). So the same graph for this CNF looks like this:

All right. This one, as I am sure you have noticed, has a curvy thing at around 60. Using elementary math, 120-60 = 60. Right, so one could reason: well, there were X unknowns, I have set 60 of them, now there are only X-60 left, so the average clause length should be 60 less! That I think doesn’t explain much, if anything, however. First off, the number of unknowns were in fact 160 — that’s the unknown state of Grain that we were trying to solve. Second, the learnt clauses don’t only contain state variables… in fact, a lot of variables they contain are not state variables at all! Furthermore, why should the 1st UIP scheme be so exact about clause sizes? After all, it’s just a graph-analysis algorithm working at the local conflict — it has no clue about the problem it is working with, much less about the number of state bits set in our instance of Grain.

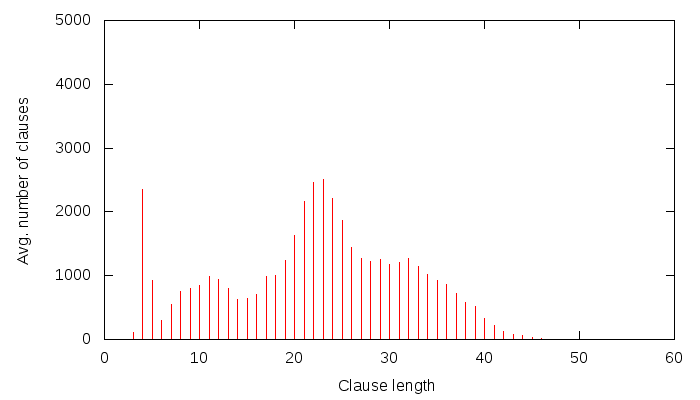

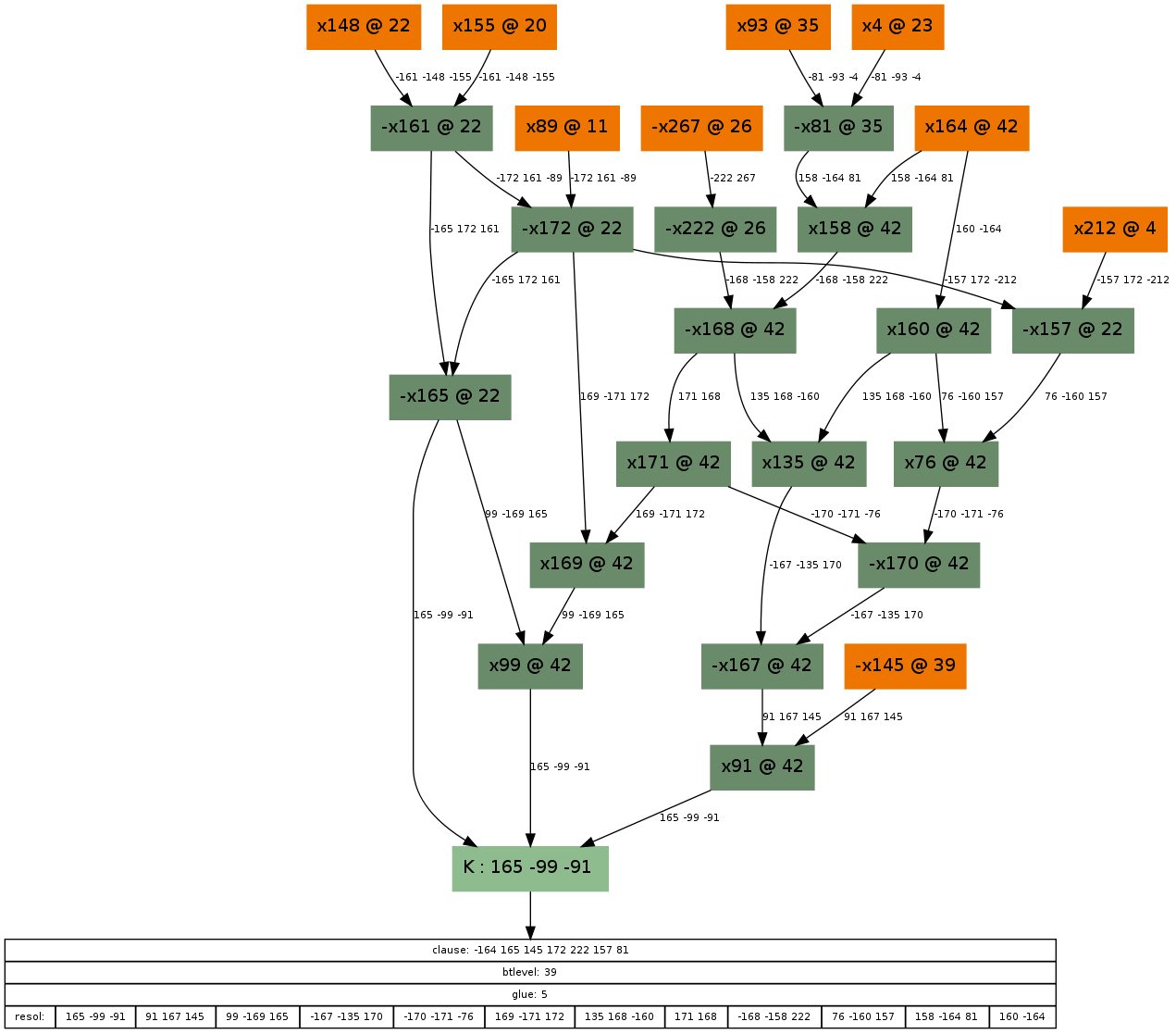

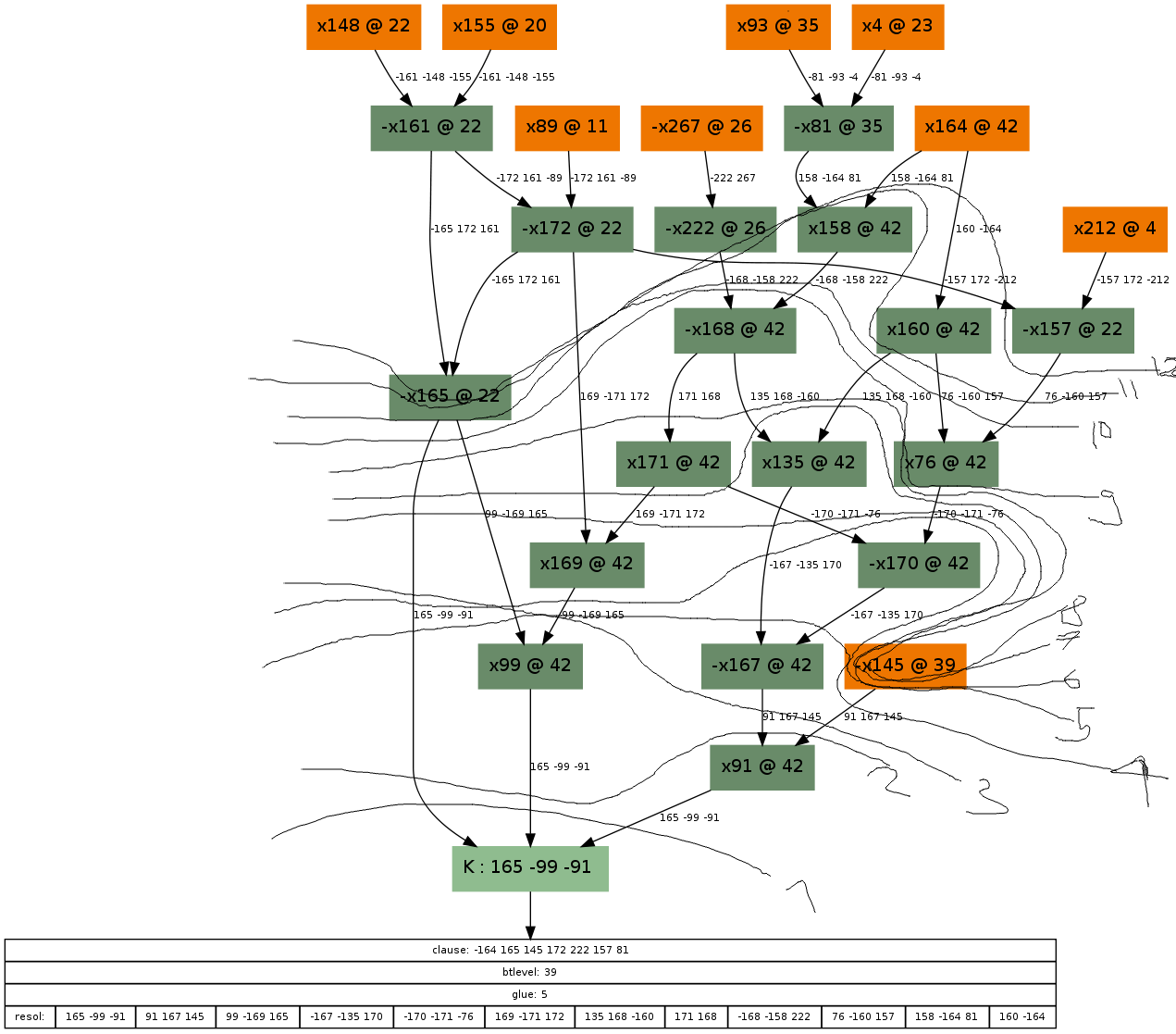

One could say that this is just a dumb example, and it has no real meaning. Maybe I just stumbled upon it, after all, 120/2 = 60, etc. However, this doesn’t seem to be the case. I can, in fact, reproduce this for a lot of X’s for any of the following ciphers: Grain, Trivium, Bivium, HiTag2 and Crypto-1. Let me show one from HiTag2. Again, we are solving for the state, and we don’t set any state bits (i.e. this is the full HiTag2 problem):

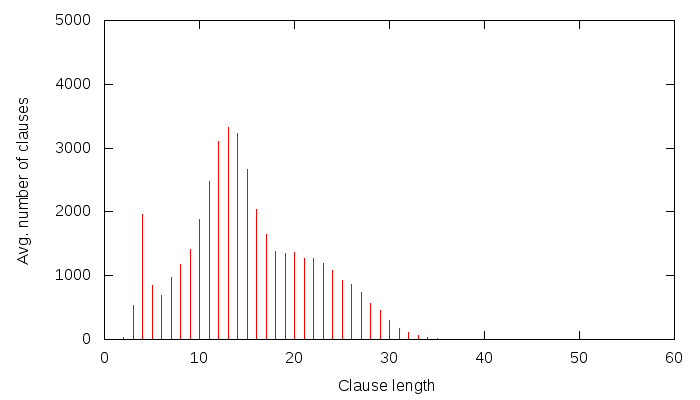

Okay, there is a bumpy thing at around 23. Now, let’s set 10 randomly picked state variables to random values, and run the example again:

There seems to be a bumpy thing at around 13. 23-10 = 13. That’s about right.

By the way, I have stumbled upon the above fun fact about 2 years ago (well before CryptoMiniSat was born), when working on my diploma thesis — these graphs are actually taken verbatim from my thesis. I have worked a lot on SAT in the past 2 years, but this has been haunting me ever since. Maybe someone could explain it to me?

PS: Does anyone know if someone has done an in-depth analysis of learnt clause length distributions, maybe even three-dimensional, showing the time on the Z axis? It would be awesome to do that, if it hasn’t been done yet!