I have been using the AMD’s OpenCL platform for a while now, so I’ll try to write a little overview of what I think is good and bad about OpenCL in general and AMD’s OpenCL implementation in particular.

I have been using the AMD’s OpenCL platform for a while now, so I’ll try to write a little overview of what I think is good and bad about OpenCL in general and AMD’s OpenCL implementation in particular.

Update: AMD was working on the issues below, and temporarily fixed some of them. They are broken again, and I gave up trying to help them. They would need more engineers to fix these issues on a permanent basis.

The good

- The speed of execution is unbeatable. I can easily get 10x better speeds than current top-of-the-line desktop CPUs. And that’s not just saving on buying the CPU and all the peripherals (memory, motherboard, etc.), but also on power, which can easily be more expensive than the upfront cost of new machines

- Once a C/C++ template code is written, OpenCL is easy to start using. There are no difficult systems to master from the main program’s perspective, only a couple of well-named functions like clEnqueueWriteBuffer

- The OpenCL documentation from Khronos is very well written. They even provide a handy reference card that is well made, too

- There are many books available. I’ve bought The OpenCL programming guide, though I got bored from its first part (describing the specification), and never got to the supposedly interesting second part, so I can’t yet recommend it

- The AMD programming guide is simply great. It lists all the important factors like register usage of kernel vs. register-limited wavefronts on the compute unit. It is basically a compressed bible, written by someone extremely knowledgeable in the subject, probably some core AMD engineer(s). I highly recommend reading it

- OpenCL 1.1 supports mulithreading and is easy to multi-thread. I have turned a program that was single-threaded and used only one GPU into a multi-GPU, multi-threaded system in only two days with OpenMP

- The GNU debugger can be used to debug the OpenCL kernel if the CPU is chosen as the target platform. This is extremely useful and considerably cuts down on development time.

So much for the good part. For something that is still in its infancy — it has only been around for about 3.5 years — the above is quite impressive. Apparently, others think it’s impressive, too, as OpenCL is already a leading solution for GPGPU developers. Now let’s get to the bad part.

The bad

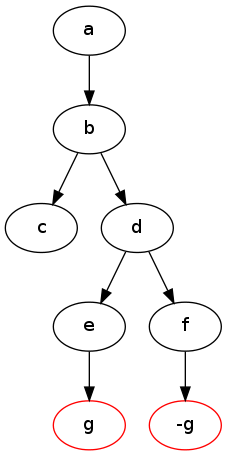

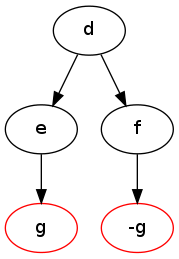

- Warning and errors are sometimes silently eaten. This is very frustrating. For example, I passed a constant memory piece as clCreateBuffer&clEnqueueWriteBuffer, but the size of the buffer was larger than the specified maximum (which for constants is pretty low, a couple of KB). What error message did I get? None. The kernel executed ‘fine’, with the slight glitch that it didn’t do anything, at all, just returned immediately — with CL_SUCCESS, naturally. I have hit this errors-get-eaten bug multiple times, with multiple kinds of bugs. So, if my code doesn’t work, it’s hard to know what is wrong

- AMD supplies debugging and analysis essentially only for Windows and Visual Studio. I have actually shelled out money on a Windows license (first time in my life) only to get these running, but then I realised I would also need a Visual Studio license, for a mere thousand dollars, to use all tools. However, after seeing the greatness of the KernelAnalyzer which I can reliably crash any moment, and gives no information other than what I can already see from the ISA file, I thought… maybe it’s not worth the money

- Valgrind gives a lot of warnings when launched on a program that uses AMD’s OpenCL implementation. This makes debugging a lot harder, because OpenCL copies a lot of data between the GPU and the CPU, and if you got the numbers wrong, there is nothing to tell you why your data is garbage. The best would be if the AMD APP SDK was valgrind-clean, and when instructed to use the CPU as the OpenCL platform it would detect memory access errors even in the OpenCL kernel.

- Global memory usage of the kernel really makes a big difference. I thought that although it’s slow, it’s not a big deal. I was wrong. It’s really slow. I can make my program go 10-20% faster just by replacing something like “x = y; x ^= z;” with “x = y^z”, where “x” is global memory, and “y” and “z” are both registers. This was completely new to me. It also gives you a hint at the intelligence of the ‘optimising’ compiler of the AMD APP SDK. But more on that later.

Now that the bad part is out of the way, it’s time for what’s really ugly.

The ugly

- The ‘optimising’ compiler in the AMD APP SDK can essentially be treated as a random function. It sometimes gives me faster code, sometimes a much slower one, and I can make it switch from one to the other with what essentially amounts to comments in the code. This is like letting a random monster eat your code then treating what came out of the monster as something to benchmark/test. Don’t be surprised if what comes out is, well, quite inedible. This ‘slight’ problem can be evaded if you simply ask the compiler not to ‘optimise’, by passing the “-cl-opt-disable” option. Sometimes optimisation helps, but when debugging performance problems, it’s best to get rid of it. My current main setup doesn’t use the optimiser and I get approx 3x faster code this way. My other code is speeded up by a factor of 1.8x with the optimiser — though it’s only a slow, sort-of backup implementation anyway

- You have to copy-paste code. Last time I copy-pasted code was when I was 12, programming Pascal. The reason for this is that in the function declaration&definition you have to specify whether the parameter is a register, a global, or a constant. Since you might want to use the same function twice, once with a constant parameter, once with a global one, you have no other choice than to copy-paste. Oh, yes, you could use a #define, but I am not sure that’s cleaner in any way. You also have to copy-paste if you want the same function, but with a slightly different ending. Putting an ‘if’ at the diverging point and passing a parameter to control the ‘if’ will be considerably slower (yes, the compiler is that bad)

- AMD’s fglrx dirvers on Linux are about as stable as a house of cards in high wind. If it doesn’t crash, that’s a good day. I can make it crash just by launching a video player, but I have more ‘fancy’ ways (e.g. pushing 2 button combinations) of reliably crashing it, too. However, the ‘radeon’ driver which is stable on Linux doesn’t work with OpenCL.Thanks to this, I actually have to reboot my machine to watch any video — and I thought those days (Windows 95?) were over

- AMD APP SDK on Linux is about as stable as their driver. For example, compiling&launching a program on the 2.6 version uses 150% CPU and forces the kernel to launch 3 kworker(=kernel worker) threads, each eating up 50% CPU, completely using up a 3-core machine. However, the same exact program can be compiled&launched on the 2.5 version, eating exactly 1% CPU and forcing no kworker usage

Conclusions

The OpenCL architecture is clean, usable and user-friendly. Unfortunately, the current AMD OpenCL implementation is bad. I hope this will improve in the future, and as more and more people start using it, the bugs will eventually be reported, triaged, and fixed. As the number of people that buy a particular card for its OpenCL capabilities increase, for instance because games start using OpenCL as a way to speed up certain physics calculations, the makers of these cards will eventually be forced to fix the bugs and make development easier. Until then, we might have to keep on suffering for the speedups we get.

[paypal-donation]

In case you’ve missed it, the

In case you’ve missed it, the